Industrialization

Quickly industrialize your AI algorithm into a production environment


Proofs of concept for AI projects tailored to your use case

State-of-the-art machine learning algorithms at your service

We will help you choose the best AI strategy for your setup.

Did your AI project fail? We will advise you on how to make it successful.

We build artificial intelligence algorithms to augment your intelligence.

AI as a service for computer vision

AI as a service for natural language processing

Latest projects

Spreadauto project:

Use of NLP to detect, extract and parse financial tables.

Creation of a Docker image.

Valuation Report project:

Set up an LLM with vLLM on Docker, deployed with Jenkins and Ansible, with queuing capabilities.

Put into production an LLM with RAG (retrieval-augmented generation) technologies to extract financial data.

Creation of a REST API with database persistence, plus MLOps and DevOps pipelines.

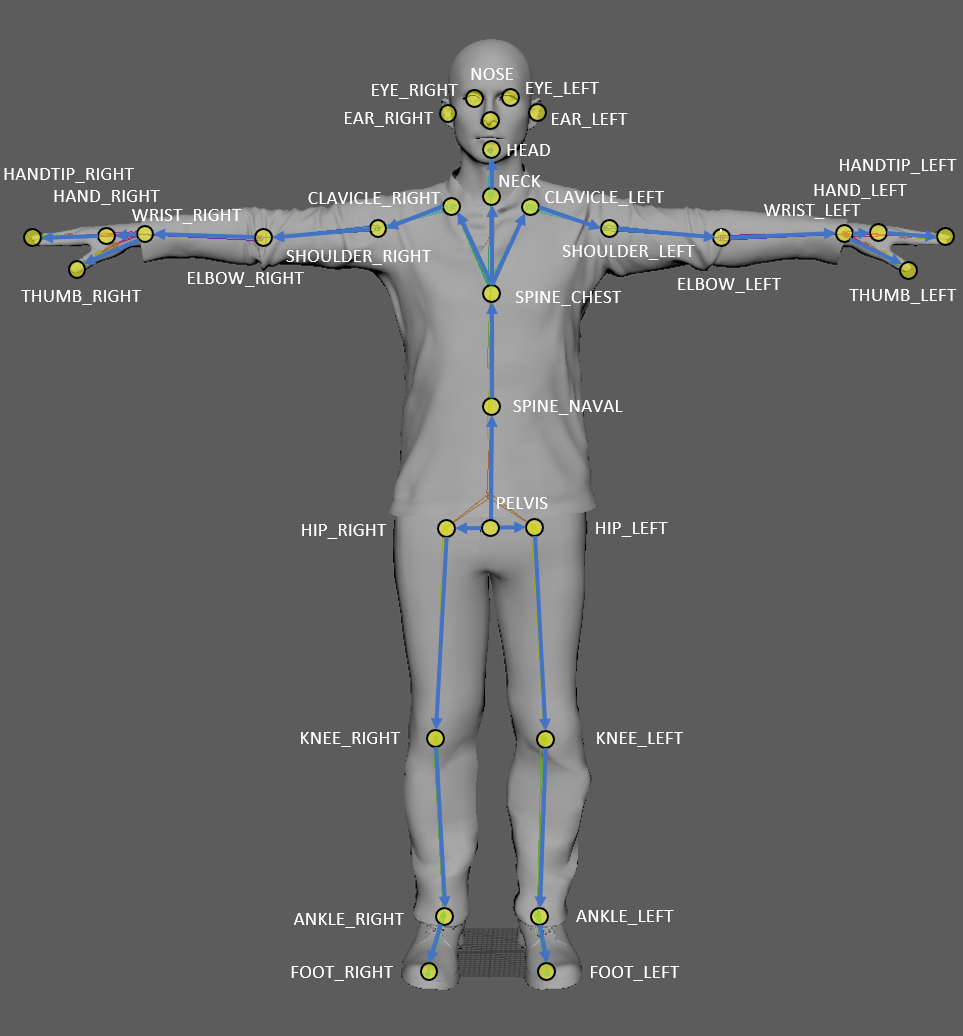

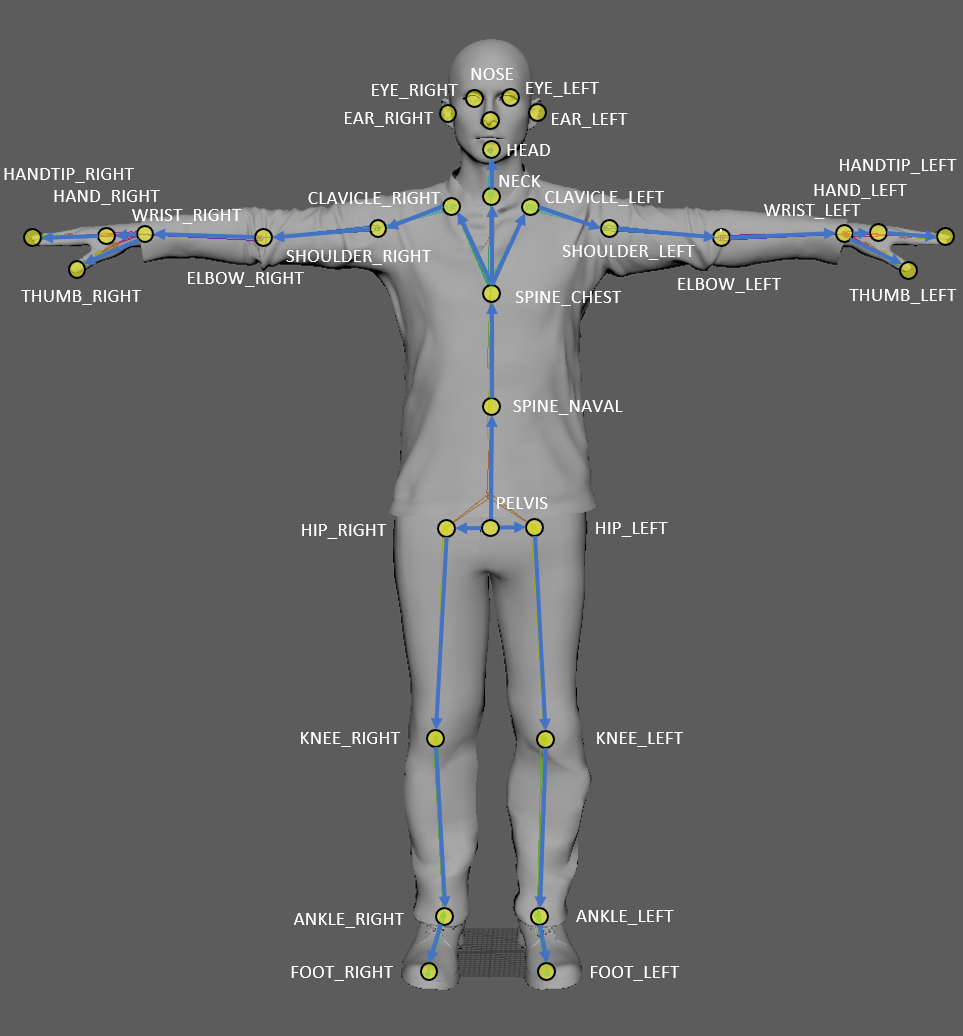

This project consisted of creating several filtering algorithms on top of Kinect pose detection to smooth and improve the real-time lightsaber position inside a Unity project.

GinoLegalTech is a company whose business is automating and managing legal contracts.

Every contract built inside the Gino workflow has a skeleton known as a Logical Question Answering (LQA).

This LQA is composed of several question-answer items, such as:

Question: Who is the party?

Answer: "GinoLegalTech"

The main task was to obtain this LQA from any external contract using NLP skills.

This project consisted of creating an OCR/translation service that can detect and replace the text content of a picture.

This project consisted of creating a machine learning algorithm that detects entities in a picture (people, objects, cars, etc.).

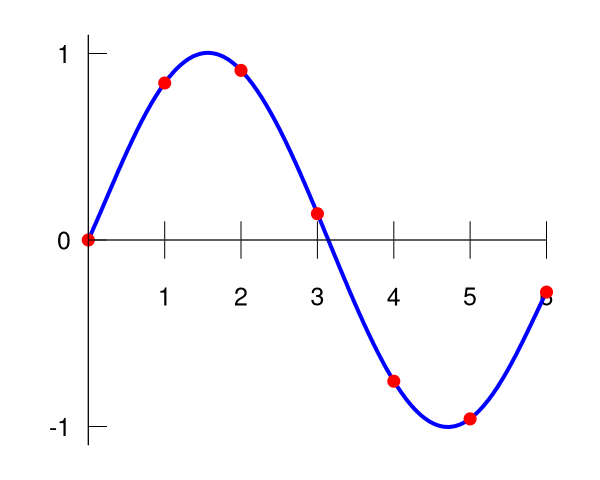

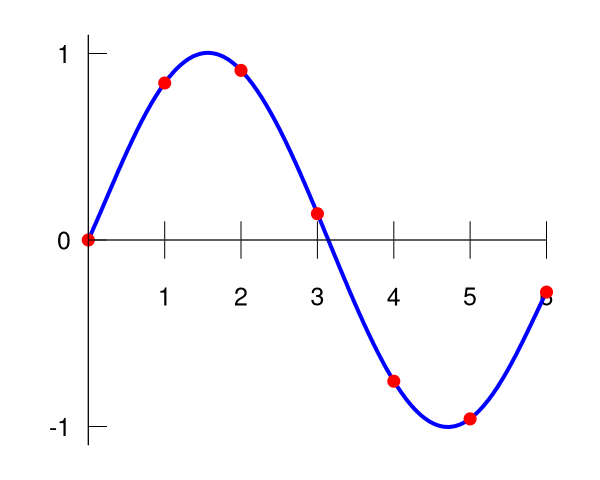

This project consisted of creating a perceptron-based machine learning algorithm that interpolates points in order to build a curve.

This project consisted of creating a machine learning algorithm that detects faces and replaces each one with a smiling emoji if the face is smiling, or with a non-smiling emoji otherwise.

The objective of this project was to optimize stock market prediction. The main idea was to extrapolate stock option data in real time. It is split into several distinct subprojects:

1. Obtaining a dataset

2. Analysis with deep and machine learning algorithms

3. Optimization to keep processing time under 1 second

4. Display of results with minimal latency

Total is a major oil and gas company with a presence all over the world.

The project consisted of training a neural network to model seismic attributes in order to find underground oil. This task required both geophysics and machine/deep learning skills.

VMPS - ProfenPoche is a company that sells subscriptions to online math courses.

This project led to the creation of a chatbot that can solve math exercises step by step and send math courses on the subject photographed with a camera.

Achievements

Spreadauto project:

Use of NLP algorithms to detect, extract and parse financial tables.

Codebase refactoring, including an upgrade from an old to a newer Python version.

Retraining of the models and creation of an automatic retraining workflow.

Creation of a Docker container to be used with Kubernetes.

Valuation Report project:

Set up an LLM with vLLM on Docker, deployed with Jenkins and Ansible, with queuing capabilities, on a server with 4 Nvidia A100 GPUs.

Put into production an LLM with RAG (retrieval-augmented generation) technologies to extract financial data from financial reports.

Creation of a REST API with database persistence, plus MLOps and DevOps pipelines using Jenkins and Kubernetes for production.

General description

The Spreadauto project aims to make it easier to work with financial data by using advanced technology to automatically detect and organize financial tables. Here's how it works in simple terms:

Understanding and Extracting Data: The project uses something called Natural Language Processing (NLP), which is a type of technology that helps computers understand human language. In this case, it helps the system identify and extract important financial tables from documents (like spreadsheets or reports), making the data easier to analyze.
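As an illustration of the detection step, the sketch below finds table-like blocks in text that has already been extracted from a document. It is a simple rule-based stand-in, not the project's NLP models: a line counts as a table row when a text label is followed by at least two numbers, and consecutive rows form a table. The number formats and the names `parse_row` and `extract_tables` are assumptions made for this example.

```python
import re
from typing import List, Optional, Tuple

# Assumed number formats: 1,234.5  -45  (800) for negatives  7%
NUMBER = re.compile(r"\(?-?\d[\d,]*(?:\.\d+)?\)?%?")

Row = Tuple[str, List[float]]


def parse_number(token: str) -> float:
    """Convert '1,234.5', '(800)' or '7%' to a float; parentheses mean negative."""
    negative = token.startswith("(") and token.endswith(")")
    value = float(token.strip("()%").replace(",", ""))
    return -value if negative else value


def parse_row(line: str, min_numbers: int = 2) -> Optional[Row]:
    """Split a line into (label, numbers) if it ends with enough numeric cells."""
    tokens = line.split()
    values: List[float] = []
    # Collect numeric cells from the right until the text label is reached
    while tokens and NUMBER.fullmatch(tokens[-1]):
        values.append(parse_number(tokens.pop()))
    if len(values) < min_numbers or not tokens:
        return None
    return " ".join(tokens), values[::-1]


def extract_tables(text: str, min_rows: int = 2) -> List[List[Row]]:
    """Group consecutive row-like lines into tables."""
    tables: List[List[Row]] = []
    current: List[Row] = []
    for line in text.splitlines() + [""]:  # trailing "" flushes the last table
        row = parse_row(line)
        if row:
            current.append(row)
            continue
        if len(current) >= min_rows:
            tables.append(current)
        current = []
    return tables
```

Each table comes back as a list of `(label, values)` rows, ready to be loaded into a spreadsheet or a dataframe.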

Updating Old Code: The project also involves updating the code that runs the system. The old code was written using an older version of Python (a programming language), and it’s being rewritten to work with a newer, more efficient version. This ensures that the system runs smoothly and can take advantage of the latest improvements in the language.

Improving the System: The models (the "brains" of the system) that make decisions are being retrained, or "taught" again, to improve accuracy and handle new types of data. The goal is to make sure the system works even better and more automatically over time.

Automation and Scalability: Lastly, the project aims to make everything run more automatically and efficiently. A Docker container is being created, which is a tool that packages everything needed to run the system into one neat "box." This box can then be easily deployed on larger computer systems (using Kubernetes), allowing the project to handle a lot of data at once and scale up as needed.

In short, the Spreadauto project is about creating a smarter, more efficient way to handle financial data, making it easier for companies to work with and analyze that data without having to do everything by hand.

The Valuation Report project is designed to automate and enhance the process of extracting valuable financial data from reports using advanced technology. Here's how it works in simple terms:

Setting Up a Powerful System: The project starts by setting up a powerful system that can handle lots of data quickly and efficiently. This involves using a tool called vLLM (an open-source engine for serving large language models efficiently), which is packaged inside something called a Docker container. Think of Docker as a "box" that makes sure all the technology needed to run the system is in one place and can be easily moved around. The system runs on a server with 4 high-performance Nvidia A100 GPUs, which are specialized processors designed to handle complex calculations very fast, like a supercharged engine for the technology.

Extracting Financial Data: The heart of the project is a large language model (LLM) combined with Retrieval-Augmented Generation (RAG). With RAG, the system first retrieves the passages of a report that are relevant to a question, then gives them to the language model as context, which helps it "read" and "understand" financial reports. This lets the system pull out useful data like figures, tables, and other key details, making the information easier to analyze.
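The retrieval half of RAG can be sketched in a few lines. This is a minimal illustration under stated assumptions: report chunks are compared with the question using bag-of-words cosine similarity (production RAG systems usually rely on dense embeddings instead), and the LLM call itself is left out. `retrieve` and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k report chunks most similar to the question."""
    q = Counter(tokenize(question))
    return sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)[:k]


def build_prompt(question: str, chunks: List[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it is sent to the LLM."""
    context = "\n\n".join(retrieve(question, chunks, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"
```

Restricting the model to the retrieved context is what lets it quote figures from the report rather than from its training data.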

Building a REST API and Database: A REST API is created, which is a way for different systems to communicate with each other over the internet. In simple terms, it’s like a “translator” that allows other applications to request and get data from the system. The data that the system extracts is stored in a database, ensuring it is organized and can be accessed easily when needed.
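A REST API backed by a database can be sketched with the Python standard library alone. The project's actual web framework, database and schema are not described here, so the `extractions` table, the `/extractions/<report>` route and the helper names below are hypothetical; SQLite and `http.server` stand in for the production stack.

```python
import json
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, List


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database that persists extracted fields."""
    conn = sqlite3.connect(path, check_same_thread=False)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS extractions ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, report TEXT, field TEXT, value TEXT)"
    )
    return conn


def save_extraction(conn: sqlite3.Connection, report: str, field: str, value: str) -> int:
    cur = conn.execute(
        "INSERT INTO extractions (report, field, value) VALUES (?, ?, ?)",
        (report, field, value),
    )
    conn.commit()
    return cur.lastrowid


def list_extractions(conn: sqlite3.Connection, report: str) -> List[Dict[str, str]]:
    rows = conn.execute(
        "SELECT field, value FROM extractions WHERE report = ? ORDER BY id", (report,)
    ).fetchall()
    return [{"field": f, "value": v} for f, v in rows]


STORE = open_store()


class ExtractionHandler(BaseHTTPRequestHandler):
    """GET /extractions/<report> returns the stored fields of one report as JSON."""

    def do_GET(self) -> None:
        prefix = "/extractions/"
        if not self.path.startswith(prefix):
            self.send_error(404)
            return
        body = json.dumps(list_extractions(STORE, self.path[len(prefix):])).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    HTTPServer(("127.0.0.1", port), ExtractionHandler).serve_forever()
```

Calling `serve()` starts the server; `GET /extractions/r1` then returns the fields saved for report `r1` as JSON.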

Automating and Managing Everything: The project also focuses on automating the entire process, making it smooth and efficient. Jenkins is used to manage automation, making sure everything happens at the right time without manual intervention. Ansible helps configure and maintain the system, while Kubernetes ensures that it runs smoothly at scale, even when there’s a lot of data to process. The system is built to handle everything from training the model to deploying it into production and ensuring the system runs without interruption.

In summary, the Valuation Report project automates the extraction of financial data from reports, builds a system that can scale to handle large amounts of data quickly, and ensures that everything runs smoothly and automatically in production. This helps financial analysts and businesses save time and get more accurate insights from their reports.

This project consisted of creating several filtering algorithms on top of Kinect pose detection to smooth and improve the real-time lightsaber position inside a Unity project.

What has been done:

1. Extraction of the Kinect pose detection output

2. Parameterization and fine-tuning of Kalman and One Euro real-time filters

3. Design of a Python library for easy deployment in Unity

4. Real-time post-processing using ZeroMQ
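Of the two filters, the One Euro filter is the simpler to show. The sketch below is a minimal single-coordinate version: an exponential low-pass filter whose cutoff frequency rises with the estimated speed, so the lightsaber stays steady when the hand is still and follows quickly when it moves. The default parameter values are illustrative, not the ones tuned in the project, and the Kalman filter is not shown.

```python
import math
from typing import Optional


def smoothing_factor(dt: float, cutoff: float) -> float:
    """Exponential-smoothing weight for a low-pass filter with the given cutoff (Hz)."""
    r = 2 * math.pi * cutoff * dt
    return r / (r + 1)


class OneEuroFilter:
    """Adaptive low-pass filter: smooth when the hand is still, responsive when it moves."""

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.0, d_cutoff: float = 1.0):
        self.min_cutoff = min_cutoff  # lower -> less jitter at rest, more lag
        self.beta = beta              # higher -> less lag during fast motion
        self.d_cutoff = d_cutoff      # cutoff used to smooth the speed estimate
        self.t_prev: Optional[float] = None
        self.x_prev = 0.0
        self.dx_prev = 0.0

    def __call__(self, t: float, x: float) -> float:
        if self.t_prev is None:  # first sample: nothing to smooth yet
            self.t_prev, self.x_prev = t, x
            return x
        dt = t - self.t_prev
        if dt <= 0:  # duplicated or out-of-order timestamp
            return self.x_prev
        # Smoothed estimate of the signal's speed
        a_d = smoothing_factor(dt, self.d_cutoff)
        dx_hat = a_d * (x - self.x_prev) / dt + (1 - a_d) * self.dx_prev
        # Faster motion raises the cutoff, which reduces lag
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = smoothing_factor(dt, cutoff)
        x_hat = a * x + (1 - a) * self.x_prev
        self.t_prev, self.x_prev, self.dx_prev = t, x_hat, dx_hat
        return x_hat
```

In practice one filter instance would be kept per coordinate of each tracked joint, for example three instances for the x, y and z of the hand, each fed with the Kinect frame timestamp.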

GinoLegalTech is a company whose business is automating and managing legal contracts.

Every contract built inside the Gino workflow has a skeleton known as a Logical Question Answering (LQA).

This LQA is composed of several question-answer items, such as:

Question: Who is the party?

Answer: "GinoLegalTech"

The main task was to obtain this LQA from any external contract using NLP skills.

This task was carried out using image processing, OCR, word embeddings, classification, NER and a dependency parser.

Extractions are varied, and new ones can be added by anyone.

Links between extractions are supported.

User interactions in the workflow are used to retrain and improve all the models.

Other tasks have also been done, such as detecting clauses that need proofreading and computing the differences between two texts.

Building annotation tools in Qt to optimize the annotation process.

Creating tools for MLOps and DevOps optimization (Docker, automatic model updates with their metrics, etc.).

Collaborating with cross-functional teams and customers to identify product improvements.

Managing and implementing the project as a SaaS solution with a development team based in Shenzhen.
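The idea that anyone can add an extraction, and that extractions can be linked, can be pictured as a registry of extractor functions with declared dependencies. The sketch below is hypothetical: the registry, the two questions and the regular expressions (standing in for the NER and dependency-parsing models) are invented for illustration and are not the Gino implementation.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical extractor signature: (contract text, answers found so far) -> answer
Extractor = Callable[[str, Dict[str, Optional[str]]], Optional[str]]
REGISTRY: Dict[str, Tuple[Extractor, List[str]]] = {}


@dataclass
class LQAItem:
    question: str
    answer: Optional[str]


def register(question: str, depends_on: Optional[List[str]] = None):
    """Let anyone plug in a new extraction, optionally linked to earlier answers."""
    def decorator(fn: Extractor) -> Extractor:
        REGISTRY[question] = (fn, depends_on or [])
        return fn
    return decorator


@register("Who is the party?")
def extract_party(text: str, answers: Dict[str, Optional[str]]) -> Optional[str]:
    # Regex stand-in for an NER model: a capitalised name followed by a legal form
    m = re.search(r"\b([A-Z][A-Za-z]+(?: [A-Z][A-Za-z]+)*) (?:SAS|SA|Ltd|Inc)\b", text)
    return m.group(1) if m else None


@register("What is the party's role?", depends_on=["Who is the party?"])
def extract_role(text: str, answers: Dict[str, Optional[str]]) -> Optional[str]:
    # Linked extraction: only searches around the party found above
    party = answers.get("Who is the party?")
    if not party:
        return None
    m = re.search(re.escape(party) + r'[^.]*?\(the "([A-Za-z]+)"\)', text)
    return m.group(1) if m else None


def build_lqa(text: str) -> List[LQAItem]:
    """Run every registered extraction, resolving linked ones first."""
    answers: Dict[str, Optional[str]] = {}

    def resolve(question: str, visiting: Tuple[str, ...] = ()) -> None:
        if question in answers:
            return
        if question in visiting:
            raise ValueError(f"circular link at {question!r}")
        fn, deps = REGISTRY[question]
        for dep in deps:
            resolve(dep, visiting + (question,))
        answers[question] = fn(text, answers)

    for question in REGISTRY:
        resolve(question)
    return [LQAItem(q, answers[q]) for q in REGISTRY]
```

Adding a new extraction then only means writing one decorated function; its links are resolved automatically, and circular links are rejected.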

This project consisted of creating an OCR/translation service that can detect and replace the text content of a picture.

What has been done:

1. OCR via Tesseract

2. Algorithm to detect text colors

3. Transformer-based translation

4. Containerization of the application with Docker for easy deployment
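One simple way to estimate text color, shown here as an assumption rather than the project's algorithm, is to vote on the pixels inside an OCR word box: the most frequent color is taken as the background, and the most frequent color that contrasts strongly with it is taken as the text. The replacement text can then be drawn in that color over the background color. The bin size and contrast threshold are illustrative.

```python
from collections import Counter
from typing import Iterable, Tuple

RGB = Tuple[int, int, int]


def quantize(rgb: RGB, step: int = 32) -> RGB:
    """Snap a color to the centre of its bin so anti-aliasing noise does not split votes."""
    r, g, b = ((c // step) * step + step // 2 for c in rgb)
    return (r, g, b)


def distance(a: RGB, b: RGB) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def text_and_background_colors(pixels: Iterable[RGB], min_contrast: float = 80.0) -> Tuple[RGB, RGB]:
    """Estimate (text color, background color) inside one OCR word box."""
    counts = Counter(quantize(p) for p in pixels)
    background = counts.most_common(1)[0][0]  # assumption: background covers most of the box
    text = Counter({c: n for c, n in counts.items() if distance(c, background) >= min_contrast})
    if not text:  # no contrasting color found
        return background, background
    return text.most_common(1)[0][0], background
```

The returned colors are bin centres rather than exact pixel values, which is usually close enough for repainting translated text.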

This project consisted of creating a machine learning algorithm that detects entities in a picture (people, objects, cars, etc.).

What has been done:

1. Refactoring of a YOLOv7 algorithm

2. Creation of an open-source library, Imlab

3. Creation of MLOps and DevOps pipelines

4. Containerization of the application with Docker for easy deployment
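Detectors in the YOLO family usually finish with non-maximum suppression (NMS), which removes overlapping boxes that describe the same object. The sketch below is a plain-Python greedy, per-class NMS for illustration only; it is not taken from the refactored YOLOv7 code or from Imlab, and the thresholds are values commonly used as defaults.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float, str]       # (box, confidence score, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections: List[Detection], iou_threshold: float = 0.45,
                        score_threshold: float = 0.25) -> List[Detection]:
    """Keep the best-scoring box per object, class by class (greedy NMS)."""
    candidates = sorted((d for d in detections if d[1] >= score_threshold),
                        key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for det in candidates:
        # Drop a box if a higher-scoring box of the same class overlaps it too much
        if all(k[2] != det[2] or iou(k[0], det[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept
```

Boxes of different classes never suppress each other, so a person and a car occupying the same region are both kept.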

This project consisted of creating a perceptron-based machine learning algorithm that interpolates points in order to build a curve.

What has been done:

1. Creation of a perceptron algorithm in Cython

2. Optimization of this algorithm

3. Input from CSV files and display with VisPy / D3
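The source does not detail the network, and a single-layer perceptron can only draw straight lines, so the sketch below assumes a multilayer perceptron with one hidden layer of tanh units, trained by full-batch gradient descent on the mean squared error. It is written in plain Python, which is the kind of tight numeric loop Cython is typically used to speed up. `load_points` assumes a header-less, two-column CSV file.

```python
import csv
import math
import random
from typing import Callable, List, Tuple


def load_points(path: str) -> Tuple[List[float], List[float]]:
    """Read x,y pairs from a two-column CSV file without a header (assumed format)."""
    with open(path, newline="") as f:
        rows = [(float(r[0]), float(r[1])) for r in csv.reader(f) if r]
    return [x for x, _ in rows], [y for _, y in rows]


def train_mlp(xs: List[float], ys: List[float], hidden: int = 8, lr: float = 0.02,
              epochs: int = 2000, seed: int = 0) -> Callable[[float], float]:
    """Fit y = f(x) with a one-hidden-layer perceptron (tanh units) by gradient descent."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    n = len(xs)
    for _ in range(epochs):
        gw1, gb1, gw2, gb2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        for x, y in zip(xs, ys):
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            err = sum(w2[j] * h[j] for j in range(hidden)) + b2 - y
            gb2 += err
            for j in range(hidden):
                gw2[j] += err * h[j]
                dz = err * w2[j] * (1 - h[j] ** 2)  # back-propagate through tanh
                gw1[j] += dz * x
                gb1[j] += dz
        # Gradient step on the mean squared error (constant factor 2 folded into lr)
        for j in range(hidden):
            w1[j] -= lr * gw1[j] / n
            b1[j] -= lr * gb1[j] / n
            w2[j] -= lr * gw2[j] / n
        b2 -= lr * gb2 / n

    def predict(x: float) -> float:
        return sum(w2[j] * math.tanh(w1[j] * x + b1[j]) for j in range(hidden)) + b2

    return predict
```

The returned `predict` function can be evaluated on a dense grid of x values to draw the interpolated curve.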

This project consisted of creating a machine learning algorithm that detects faces and replaces each one with a smiling emoji if the face is smiling, or with a non-smiling emoji otherwise.

What has been done:

1. Creation of a smile dataset

2. Use of a computer vision algorithm to detect faces

3. Training of a vision machine learning algorithm to detect whether a face is smiling

4. Design and optimization of the related pipeline to run on live video

5. Containerization of the application with Docker for easy deployment
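The per-frame logic of such a pipeline can be sketched as follows. `detect_faces` and `smile_probability` are hypothetical placeholders for the face detector and the trained smile classifier, and frames are nested lists of grayscale values so the example stays self-contained; a live-video version would call `process_frame` on every captured frame.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), assumed convention
Frame = List[List[int]]          # toy grayscale frame: rows of pixel values


def paste_resized(frame: Frame, sprite: Sequence[Sequence[int]], box: Box) -> None:
    """Nearest-neighbour resize of `sprite` into `box`, written into `frame` in place."""
    x1, y1, x2, y2 = box
    sh, sw = len(sprite), len(sprite[0])
    for y in range(y1, y2):
        for x in range(x1, x2):
            frame[y][x] = sprite[(y - y1) * sh // (y2 - y1)][(x - x1) * sw // (x2 - x1)]


def process_frame(
    frame: Frame,
    detect_faces: Callable[[Frame], List[Box]],
    smile_probability: Callable[[Frame, Box], float],
    smiling_emoji: Sequence[Sequence[int]],
    neutral_emoji: Sequence[Sequence[int]],
    threshold: float = 0.5,
) -> Frame:
    """Detect faces, classify each one, and cover it with the matching emoji."""
    # Classify every face before drawing so an emoji never hides a face still to be classified
    decisions = [(box, smile_probability(frame, box) >= threshold) for box in detect_faces(frame)]
    for box, smiling in decisions:
        paste_resized(frame, smiling_emoji if smiling else neutral_emoji, box)
    return frame
```

Keeping detection, classification and drawing as separate steps makes it easy to profile and optimize each one for live video.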

The objective of this project was to optimize stock market prediction. The main idea was to extrapolate stock option data in real time. It is split into several distinct subprojects:

1. Obtaining a dataset: to obtain the data, a Python web scraping program was developed. It uses Selenium and BeautifulSoup to parse HTML data and runs in real time. As soon as the scraper retrieves the stock option information, it is stored in a Redis database on a web server.

2. Use of deep and machine learning algorithms to extrapolate the data: LSTM, perceptron, random forest and real-time peak detection were used to make the best decision relative to the risk.

3. Optimization of computation speed: every algorithm was optimized to produce its result in less than 1 second by converting it to C++ and Fortran.

4. Visual display: tools such as OpenCL and VisPy were used to display the results with a latency of less than 30 ms.
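The source does not name the real-time peak detection method. One common streaming approach, sketched here as an assumption, is the smoothed z-score algorithm: a sample is flagged when it deviates from the moving mean of the last `lag` samples by more than `threshold` standard deviations, and detected peaks enter the baseline only with weight `influence`. The default values are illustrative.

```python
import statistics
from collections import deque


class ZScorePeakDetector:
    """Streaming peak detection with the smoothed z-score method."""

    def __init__(self, lag: int = 30, threshold: float = 3.0, influence: float = 0.5):
        self.lag = lag              # number of past samples forming the baseline
        self.threshold = threshold  # how many standard deviations count as a peak
        self.influence = influence  # weight of a detected peak in the baseline (0..1)
        self.window: deque = deque(maxlen=lag)

    def update(self, value: float) -> int:
        """Return +1 for a peak, -1 for a trough, 0 otherwise."""
        if len(self.window) < self.lag:  # still collecting the initial baseline
            self.window.append(value)
            return 0
        mean = statistics.fmean(self.window)
        std = statistics.pstdev(self.window)
        if abs(value - mean) > self.threshold * std:
            # Damp the peak so it does not inflate the baseline it is compared with
            self.window.append(self.influence * value + (1 - self.influence) * self.window[-1])
            return 1 if value > mean else -1
        self.window.append(value)
        return 0
```

Each new price is processed in constant memory, which suits a feed arriving in real time.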

Total is a major oil and gas company with a presence all over the world.

The project consisted of training a neural network to model seismic attributes in order to find underground oil. This task required both geophysics and machine/deep learning skills.

Among oil-location methodologies, the main issue is the long processing time of seismic data. To image the subsurface, one must perform a seismic acquisition based on sound wave propagation. Seismic wave data are recorded as a function of time, and one seeks to convert time into depth: this process is the inversion. After the inversion, the data are processed to obtain specific seismic attributes (for example, rock porosity) in order to find potential oil.
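In its simplest form (a layered velocity model), the time-to-depth conversion follows from the wave travelling down and back up: with two-way travel time t and interval velocity v, depth is half the velocity integrated over time. The two-layer numbers below are illustrative, not project data.

```latex
z(t) = \frac{1}{2}\int_{0}^{t} v(\tau)\,d\tau
\qquad\Longrightarrow\qquad
z_n = \frac{1}{2}\sum_{i=1}^{n} v_i\,\Delta t_i

% Example: v_1 = 2000 m/s over 0.4 s, v_2 = 3000 m/s over 0.2 s (two-way times)
z_2 = \tfrac{1}{2}(2000 \times 0.4) + \tfrac{1}{2}(3000 \times 0.2) = 400 + 300 = 700\ \text{m}
```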

Based on well data and seismic profiles, deep learning is used to speed up the whole process: well data are used to learn the association between seismic attributes and the seismic profile at each well, and the model is then generalized to the entire seismic profile.
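The learn-at-the-wells, predict-everywhere workflow can be illustrated with a deliberately small model. In the sketch below a one-feature least-squares line stands in for the neural network, and the trace ids, the single seismic feature and the porosity values are invented for illustration.

```python
from typing import Dict, List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def generalize(profile: Dict[int, float], well_logs: Dict[int, float]) -> Dict[int, float]:
    """Learn feature -> attribute at well traces, then predict it on every trace."""
    wells = sorted(well_logs)  # traces where the attribute was measured in a well
    slope, intercept = fit_line([profile[i] for i in wells], [well_logs[i] for i in wells])
    return {trace: slope * feature + intercept for trace, feature in profile.items()}
```

A neural network replaces the straight line when the relation between seismic response and attribute is nonlinear, but the split between training at the wells and inference on the full profile stays the same.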

VMPS - ProfenPoche is a company that sells subscriptions to online math courses.

This project led to the creation of a chatbot that can solve math exercises step by step and send math courses on the subject photographed with a camera.

What has been done:

• Creation of an artificial intelligence for a school application: given a photo, it uses Tesseract OCR to send students the course and exercise content associated with that image. It detects equations and converts them into LaTeX format, and can also solve some mathematical problems

• Development of a neural network based on the seq2seq model to improve the artificial intelligence's results

• Use of growth hacking methods for a mobile application; impact evaluation of several marketing approaches

• Contribution to the business plan development and the company's financial analysis

• Speed and precision improvements to the previously created artificial intelligence

• Creation of another artificial intelligence to solve any middle to high school mathematical problem “step by step” by generating a LaTeX sheet
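Producing a step-by-step LaTeX solution can be illustrated on the simplest case. The sketch below is hypothetical and rule-based: it only handles linear equations of the form ax + b = c with integer coefficients and is not the project's seq2seq model. Each returned line is one step, and `to_latex_sheet` wraps them in an `align*` environment.

```python
import re
from fractions import Fraction
from typing import List

# Only equations shaped like "ax + b = c" or "ax - b = c" with integer coefficients
PATTERN = re.compile(r"^\s*(-?\d*)\s*x\s*([+-])\s*(\d+)\s*=\s*(-?\d+)\s*$")


def solve_linear_steps(equation: str) -> List[str]:
    """Return the LaTeX lines of a step-by-step solution of ax + b = c."""
    m = PATTERN.match(equation)
    if not m:
        raise ValueError("only equations of the form ax + b = c are handled")
    a_txt, sign, b_txt, c_txt = m.groups()
    a = {"": 1, "-": -1}.get(a_txt)
    if a is None:
        a = int(a_txt)
    if a == 0:
        raise ValueError("the coefficient of x must be non-zero")
    b = int(b_txt) if sign == "+" else -int(b_txt)
    c = int(c_txt)
    coef = {1: "", -1: "-"}.get(a, str(a))
    rhs = c - b
    move = f"{c} - {b}" if b >= 0 else f"{c} + {-b}"  # subtract b on both sides
    steps = [f"{coef}x {sign} {b_txt} = {c}", f"{coef}x = {move}", f"{coef}x = {rhs}"]
    if a != 1:  # divide both sides by a
        x = Fraction(rhs, a)
        steps.append(rf"x = \frac{{{rhs}}}{{{a}}}")
        steps.append(f"x = {x.numerator}" if x.denominator == 1
                     else rf"x = \frac{{{x.numerator}}}{{{x.denominator}}}")
    return steps


def to_latex_sheet(steps: List[str]) -> str:
    return "\\begin{align*}\n" + " \\\\\n".join(steps) + "\n\\end{align*}"
```

Using `Fraction` keeps results exact, so a non-integer answer such as 7/2 is written as a LaTeX fraction rather than a rounded decimal.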